There’s no quick answer to this question

The sad news broke online when Tesla published a post titled "A Tragic Loss" on the brand's blog, announcing the death of a Tesla Model S owner in an accident that happened while the driver was cruising on a highway in Florida with Autopilot mode activated.

As both protocol and common sense demand, Tesla alerted the authorities – namely the NHTSA (National Highway Traffic Safety Administration) – and the federal agency opened an investigation into the incident, which is still ongoing.

Although we're looking at the first known fatality in just over 130 million miles driven with Autopilot activated, the automotive industry is no stranger to self-driving car flops. That's something carmakers and users should expect, and such incidents should be regarded as another reminder that there's no such thing as a foolproof system, especially when it comes to something as complex as Tesla's Autopilot function.

Where things stand right now

Google ran into the same problem earlier this year. After its autonomous cars had been involved in 17 minor traffic incidents caused by other drivers, a Lexus 450 hybrid SUV carrying Google's self-driving tech cut off a bus, which led to a fender bender. Still, the fact that Google's autonomous car fleet has more than 1.5 million miles under its belt without major incidents remains an exceptional feat, even though the chance of things going south is still out there.

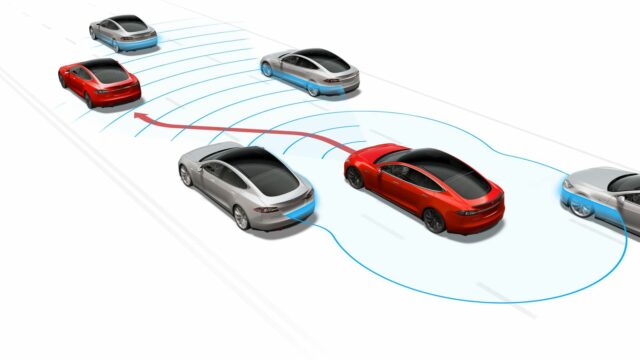

Then again, carmakers and industry experts have previously cautioned the public about the deceptive nature of such semi- or fully autonomous systems. For example, Tesla disables the Model S's Autopilot function by default, and to enable it, drivers must acknowledge that they fully understand that Autopilot is new technology still in its beta phase.

Furthermore, on activating Autopilot, the driver is shown additional messages such as "Autopilot is an assist feature that requires you to keep your hands on the steering wheel at all times," "you need to maintain control and responsibility for your vehicle" or "always keep your hands on the wheel and be prepared to take over at any time."

However, the most worrying question is whether or not the driver was paying attention to the road. We've often seen videos of people reading or sleeping behind the wheel of a Tesla Model S, trusting Autopilot with their safety and, ultimately, their lives. There was even a prank in which an Autopilot-equipped Model S was left to roam freely on the highway, just to stir amazed reactions from other drivers.

So far, that does not appear to be the case with this unfortunate incident, as Tesla states that "the vehicle was on a divided highway with Autopilot engaged when a tractor trailer drove across the highway perpendicular to the Model S. Neither Autopilot nor the driver noticed the white side of the tractor trailer against a brightly lit sky, so the brake was not applied."

What happens next?

Of course, it's still too early to jump to conclusions, especially with a federal investigation underway that will, sooner or later, establish the exact cause of the accident. What I think is vital for the time being is how the media, and other carmakers too, choose to approach the situation. Given that it's the first such incident connected to an advanced semi-autonomous function – advanced in the sense that it allows the driver to let go of the steering wheel – there may be some doubt cast on how reliable such a system is.

But even more importantly, it's an ideal time for the media and carmakers alike to focus on educating people by helping them understand and, subsequently, accept new technologies, with both their pros and cons. It's quite clear that the self-driving car industry needs clear-cut guidelines and safety regulations. The irony is that this month (i.e. July 2016), the NHTSA was expected to release a comprehensive set of autonomous car guidelines in response to this growing trend.

If we are to call ourselves ready for autonomous driving, then maybe we should all start adopting a cautious approach to innovations – not by rejecting them or giving them a reluctant welcome, but by first weighing the good against the bad while trying to understand how a system such as Autopilot can, ultimately, change lives – ours or those of others.

The ugly truth is – and I hope I'm wrong about this one – that more incidents like this are bound to happen. It therefore remains to be seen whether the car industry will slow down its enthusiastic charge toward autonomous driving solutions, or whether such functions will only be made available once their failure rate is reduced to a minimum.